Background

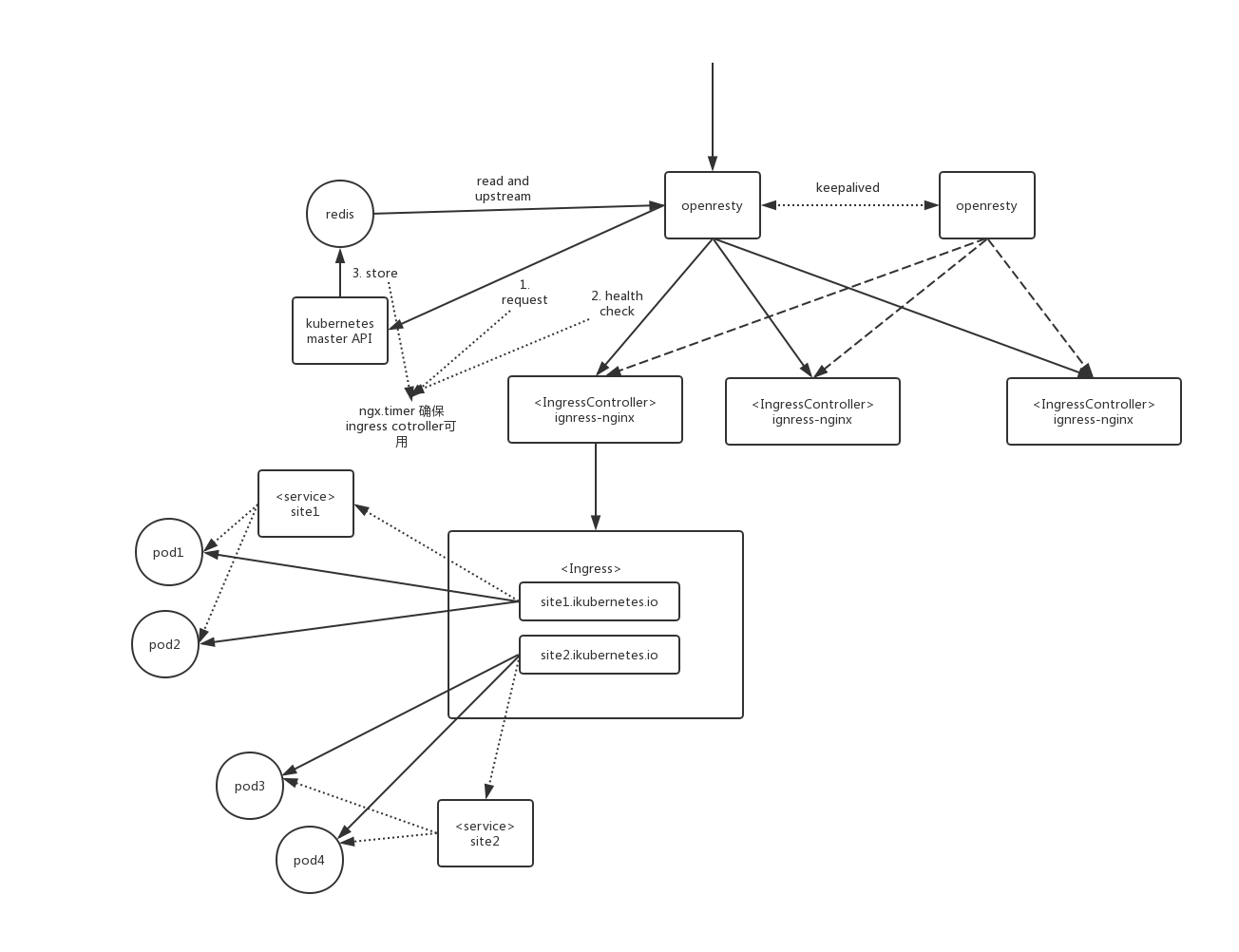

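In our Kubernetes cluster the nginx ingress controller runs as a DaemonSet, yet the access entry point pointed at a single node. As a result, whenever the ingress on that node restarts, the service is briefly unavailable. Putting a static load balancer in front would fix that, but its configuration would have to be edited every time a node is added. Having recently looked into OpenResty, which runs Lua code inside nginx, I found that dynamic load balancing with OpenResty is a viable option. The architecture is shown in the diagram above.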

Implementation

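```shell
# Create the project directories
~]# mkdir /ddhome/kube-upstream -p
~]# mkdir /ddhome/kube-upstream/{config,logs,lua}
# nginx.conf includes vhosts/*.conf, so config/vhosts is needed as well
~]# mkdir /ddhome/kube-upstream/config/vhosts
```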

config/nginx.conf

```nginx
worker_processes auto;

events {
    worker_connections 65535;
    use epoll;
}

include vhosts/*.conf;
```

config/vhosts/kube.conf

```nginx
stream {
    log_format proxy '$remote_addr [$time_local] '
                     '$protocol $status $bytes_sent $bytes_received '
                     '$session_time "$upstream_addr" '
                     '"$upstream_bytes_sent" "$upstream_bytes_received" "$upstream_connect_time"';

    init_by_lua_file "/ddhome/kube-upstream/lua/init.lua";

    init_worker_by_lua_file "/ddhome/kube-upstream/lua/init_worker.lua";

    preread_by_lua_file "/ddhome/kube-upstream/lua/preread.lua";

    upstream kube80 {
        # placeholder only; the actual peer is set by balancer_by_lua
        server 127.0.0.1:80;
        balancer_by_lua_file "/ddhome/kube-upstream/lua/balancer80.lua";
    }

    upstream kube443 {
        # placeholder only; the actual peer is set by balancer_by_lua
        server 127.0.0.1:443;
        balancer_by_lua_file "/ddhome/kube-upstream/lua/balancer443.lua";
    }

    access_log /ddhome/kube-upstream/logs/tcp-access.log proxy;

    server {
        listen 80;
        proxy_connect_timeout 5s;
        proxy_timeout 600s;
        proxy_pass kube80;
    }

    server {
        listen 443;
        proxy_connect_timeout 5s;
        proxy_timeout 600s;
        proxy_pass kube443;
    }

}
```

lua/init.lua

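```lua
-- Initialize global variables used by the other scripts
http = require "resty.http"
cjson = require "cjson"
redis = require "resty.redis"
balancer = require "ngx.balancer"
-- API server address of the production k8s cluster
uri = "http://192.168.66.128:8080"
redis_host = "192.168.66.82"
redis_port = 6379
-- interval between health-check rounds, in seconds
delay = 5
```

redis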

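```shell
# lua-resty-redis already ships with OpenResty; opm's subcommand is "get", not "install"
opm get openresty/lua-resty-redis
# resty.http (required in init.lua) is not bundled with OpenResty and must be installed too
opm get ledgetech/lua-resty-http
yum install -y redis
```

lua/init_worker.lua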

```lua
-- Layer-4 health check: ingress serves HTTP and HTTPS, so probe ports 80 and 443
local health = function(s)
    local sock = ngx.socket.tcp()
    sock:settimeout(3000)
    local ok, err = sock:connect(s, 80)
    if not ok then
        ngx.log(ngx.ERR, "connect 80 err : ", s, " ", err)
        sock:close()
        return false
    end
    -- calling connect() on a connected cosocket closes the previous connection first
    ok, err = sock:connect(s, 443)
    if not ok then
        ngx.log(ngx.ERR, "connect 443 err : ", s, " ", err)
        sock:close()
        return false
    end
    sock:close()
    return true
end

-- Query the k8s API for the worker nodes
local nodes = function()
    local httpc = http.new()
    httpc:set_timeout(5000)
    local res, err = httpc:request_uri(uri, {
        path = "/api/v1/nodes",
        method = "GET"
    })

    if not res then
        ngx.log(ngx.ERR, "failed to request: ", err)
        return nil
    end
    if res.status ~= 200 then
        ngx.log(ngx.ERR, "unexpected k8s api status: ", res.status)
        return nil
    end
    -- request_uri() closes or pools the connection itself, no httpc:close() needed
    return res.body
end

-- Probe every node and store the healthy ones in redis as a JSON array
local store = function(premature)
    -- premature is true when the worker is shutting down
    if premature then
        return
    end

    local data = nodes()
    if not data then
        -- keep the previous list instead of overwriting it with an empty one
        return
    end

    local server = {}
    for _, v in ipairs(cjson.decode(data)['items'] or {}) do
        -- node names are used as addresses, so they must be IPs:
        -- balancer.set_current_peer() does not resolve hostnames
        local s = v['metadata']['name']
        if health(s) then
            server[#server + 1] = s
        end
    end

    local rds = redis:new()
    rds:set_timeout(1000)
    local ok, err = rds:connect(redis_host, redis_port)
    if not ok then
        ngx.log(ngx.ERR, "fail to connect redis: ", err)
        return
    end

    ok, err = rds:set("servs", cjson.encode(server))
    if not ok then
        ngx.log(ngx.ERR, "fail set redis: ", err)
        return
    end
    -- return the connection to the pool
    rds:set_keepalive(10000, 100)
end

-- Start the timer only in worker 0 so each round runs once, not once per worker
if ngx.worker.id() == 0 then
    local ok, err = ngx.timer.every(delay, store)
    if not ok then
        ngx.log(ngx.ERR, "timer fail to start: ", err)
    end
end
```

lua/preread.lua

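```lua
-- Cosockets (network I/O) are disabled in balancer_by_lua, so read the
-- server list from redis in the preread phase and pass it on through ngx.ctx
local rds = redis:new()
rds:set_timeout(1000)

local ok, err = rds:connect(redis_host, redis_port)
if not ok then
    ngx.log(ngx.ERR, "fail to connect redis: ", err)
    return ngx.exit(ngx.ERROR)
end

local res, err = rds:get("servs")
-- GET returns ngx.null, not nil, when the key does not exist yet
if not res or res == ngx.null then
    ngx.log(ngx.ERR, "fail to get key servs: ", err)
    return
end

-- put the connection back into the pool instead of opening one per client connection
rds:set_keepalive(10000, 100)

ngx.ctx.servs = cjson.decode(res)
```

lua/balancer80.lua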

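```lua
-- Balance port 80 across the available ingress controller nodes
local upstreams = ngx.ctx.servs
if not upstreams or #upstreams == 0 then
    ngx.log(ngx.ERR, "80 no available upstream servers")
    return ngx.exit(ngx.ERROR)
end

balancer.set_timeouts(1, 600, 600)
-- this script runs again on every retry; grant the extra tries only once
if not ngx.ctx.more_tries_set then
    ngx.ctx.more_tries_set = true
    balancer.set_more_tries(2)
end

local n = math.random(#upstreams)

local ok, err = balancer.set_current_peer(upstreams[n], 80)
if not ok then
    ngx.log(ngx.ERR, "80 failed to set peer: ", err)
    return ngx.exit(ngx.ERROR)
end
```

lua/balancer443.lua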

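```lua
-- Balance port 443 across the available ingress controller nodes
local upstreams = ngx.ctx.servs
if not upstreams or #upstreams == 0 then
    ngx.log(ngx.ERR, "443 no available upstream servers")
    return ngx.exit(ngx.ERROR)
end

balancer.set_timeouts(1, 600, 600)
-- this script runs again on every retry; grant the extra tries only once
if not ngx.ctx.more_tries_set then
    ngx.ctx.more_tries_set = true
    balancer.set_more_tries(2)
end

local n = math.random(#upstreams)

local ok, err = balancer.set_current_peer(upstreams[n], 443)
if not ok then
    ngx.log(ngx.ERR, "443 failed to set peer: ", err)
    return ngx.exit(ngx.ERROR)
end
```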
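Both balancer scripts pick a peer with `math.random`. This causes two problems: a retry granted by `set_more_tries(2)` can land on the node that just failed, and `math.random(#upstreams)` fails outright when the list is empty. Below is a minimal plain-Lua sketch of a retry-aware selection step. `choose_peer` is a hypothetical helper, not part of the original scripts. The caller is assumed to keep the set of peers already tried, and the random function is passed in so the logic can run outside nginx.

```lua
-- Hypothetical peer selection for balancer_by_lua: skip peers already tried
-- in this session and fail cleanly when there is nothing to choose from.
-- servs: array of IPs; tried: set of IPs ({ [ip] = true }); rand(n): integer in 1..n
local function choose_peer(servs, tried, rand)
    if type(servs) ~= "table" or #servs == 0 then
        return nil, "no upstream servers"
    end
    local candidates = {}
    for _, ip in ipairs(servs) do
        if not tried[ip] then
            candidates[#candidates + 1] = ip
        end
    end
    -- every peer has already been tried: fall back to the full list
    if #candidates == 0 then
        candidates = servs
    end
    return candidates[rand(#candidates)]
end

-- Usage: three attempts in one session never repeat a peer while untried ones remain
local servs = { "10.0.0.1", "10.0.0.2", "10.0.0.3" }
local tried = {}
for attempt = 1, 3 do
    local peer = assert(choose_peer(servs, tried, math.random))
    tried[peer] = true
    print(attempt, peer)
end
```

Inside `balancer_by_lua`, the tried set could live in `ngx.ctx`, which persists across retries of the same session. The flow would be:

1. Initialize it with `ngx.ctx.tried = ngx.ctx.tried or {}`.
2. Call `choose_peer(ngx.ctx.servs, ngx.ctx.tried, math.random)`.
3. Mark the result as tried.
4. Pass it to `balancer.set_current_peer(peer, 80)` (or `443`).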
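init_worker.lua uses each node's `metadata.name` both as the health-check target and as the address later handed to `balancer.set_current_peer()`. That function expects an IP address, so the setup works only when node names are IPs. If they are hostnames, one option is to read the node's `InternalIP` from `status.addresses` in the same `/api/v1/nodes` response. The plain-Lua sketch below assumes the body has already been decoded (for example with `cjson.decode`). `node_ip` and `healthy_ips` are hypothetical names, and the health check is passed in as a function standing in for `health(s)`.

```lua
-- Hypothetical helpers: take each node's InternalIP from a decoded
-- /api/v1/nodes response and keep only the nodes that pass a health check.

-- Return the first InternalIP listed for a node, or nil if there is none.
local function node_ip(node)
    local status = node.status or {}
    for _, addr in ipairs(status.addresses or {}) do
        if addr.type == "InternalIP" then
            return addr.address
        end
    end
    return nil
end

-- Collect the IPs of healthy nodes into an array.
-- is_healthy(ip) stands in for health(s) from init_worker.lua.
local function healthy_ips(node_list, is_healthy)
    local servers = {}
    for _, node in ipairs(node_list.items or {}) do
        local ip = node_ip(node)
        if ip and is_healthy(ip) then
            servers[#servers + 1] = ip
        end
    end
    return servers
end

-- Usage with a small hand-written response (already decoded)
local sample = {
    items = {
        { metadata = { name = "node-a" },
          status = { addresses = {
              { type = "Hostname", address = "node-a" },
              { type = "InternalIP", address = "10.0.0.1" },
          } } },
        { metadata = { name = "node-b" },
          status = { addresses = {
              { type = "InternalIP", address = "10.0.0.2" },
          } } },
    },
}

-- pretend node-b fails its health check
local servers = healthy_ips(sample, function(ip) return ip ~= "10.0.0.2" end)
print(table.concat(servers, ","))  -- 10.0.0.1
```

In `store()`, a call such as `healthy_ips(cjson.decode(data), health)` could then replace the loop over `metadata.name`.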
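preread.lua queries Redis once for every incoming TCP connection, although the list only changes every `delay` seconds. One possible refinement, not part of the design above, is to cache the decoded list in each worker for a short TTL. The sketch shows only the caching logic in plain Lua:

- `make_cache` is a hypothetical helper.
- `fetch` would wrap the Redis `get` plus `cjson.decode`.
- `now` would be `ngx.now` in OpenResty.

Top-level locals in a `*_by_lua_file` script are re-created on every run. The cache object would therefore have to live in a module loaded with `require` or in a global created in init.lua.

```lua
-- Hypothetical per-worker TTL cache for the server list.
-- fetch() returns a list (or nil, err); now() returns the current time in seconds.
local function make_cache(ttl, fetch, now)
    local value, expires = nil, 0
    return function()
        local t = now()
        if value and t < expires then
            return value -- still fresh: no Redis round trip
        end
        local fresh, err = fetch()
        if fresh and #fresh > 0 then
            value, expires = fresh, t + ttl
            return value
        end
        -- fetch failed or returned an empty list: reuse the last good list if any
        if value then
            return value
        end
        return nil, err or "no servers available"
    end
end

-- Usage with a fake clock and a fake fetch that counts its calls
local calls, clock = 0, 0
local get_servs = make_cache(5, function()
    calls = calls + 1
    return { "10.0.0.1", "10.0.0.2" }
end, function() return clock end)

get_servs()        -- t = 0: fetches
clock = 3
get_servs()        -- t = 3: served from the cache
clock = 6
get_servs()        -- t = 6: expired, fetches again
print(calls)       -- 2
```

Falling back to the last good list when Redis is unreachable keeps traffic flowing on possibly stale data. Returning an error instead, as the original preread.lua does, is the stricter choice.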