Docker Networking

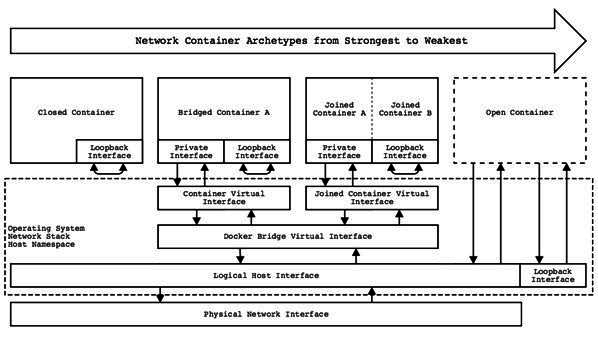

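When the Docker daemon starts, a virtual interface named docker0 appears on the host by default. As covered in the discussion of virtualized networking, this interface is a bridge. When a container starts, it is attached to this bridge by default through a virtual interface, and traffic between the container and the outside goes through NAT. The default network that Docker creates is therefore a NAT network. The common Docker network modes are shown in the figure above.

As the figure shows, Docker has four network modes. Docker names them host, container, none, and bridge.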

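host mode

A container started in host mode does not get its own network namespace. It shares the host's network namespace, so the container has no interfaces of its own: the interfaces visible inside the container are exactly the host's. The container and the host share one TCP/IP stack, so a service started in the container is reachable at the host's IP address and port with no address translation, as if it ran directly on the host. The container's other namespaces remain isolated.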

```shell
[root@INIT docker]# docker run -it --name test --rm --net=host centos /bin/bash
[root@INIT /]#
[root@INIT /]# ps
  PID TTY          TIME CMD
    1 ?        00:00:00 bash
   14 ?        00:00:00 ps
[root@INIT /]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:1c:42:6b:bd:25 brd ff:ff:ff:ff:ff:ff
    inet 10.211.55.6/24 brd 10.211.55.255 scope global dynamic eth0
       valid_lft 1407sec preferred_lft 1407sec
    inet6 fdb2:2c26:f4e4:0:21c:42ff:fe6b:bd25/64 scope global noprefixroute dynamic
       valid_lft 2591676sec preferred_lft 604476sec
    inet6 fe80::21c:42ff:fe6b:bd25/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP
    link/ether 02:42:ef:69:ff:b0 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:efff:fe69:ffb0/64 scope link
       valid_lft forever preferred_lft forever
11: veth12a4b18@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP
    link/ether 32:9b:98:19:ed:c7 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::309b:98ff:fe19:edc7/64 scope link
```

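Now look at the containers from the host. The interface list is identical to the one seen inside the container: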

```shell
[root@INIT ~]# docker ps
CONTAINER ID        IMAGE               COMMAND             CREATED             STATUS              PORTS               NAMES
fd905c8a66d9        centos              "/bin/bash"         30 seconds ago      Up 29 seconds                           test
88cfec2bb879        centos:latest       "/bin/bash"         46 hours ago        Up 46 hours                             centos7
[root@INIT ~]# ifconfig
-bash: ifconfig: command not found
[root@INIT ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:1c:42:6b:bd:25 brd ff:ff:ff:ff:ff:ff
    inet 10.211.55.6/24 brd 10.211.55.255 scope global dynamic eth0
       valid_lft 1391sec preferred_lft 1391sec
    inet6 fdb2:2c26:f4e4:0:21c:42ff:fe6b:bd25/64 scope global noprefixroute dynamic
       valid_lft 2591660sec preferred_lft 604460sec
    inet6 fe80::21c:42ff:fe6b:bd25/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP
    link/ether 02:42:ef:69:ff:b0 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:efff:fe69:ffb0/64 scope link
       valid_lft forever preferred_lft forever
11: veth12a4b18@if10: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP
    link/ether 32:9b:98:19:ed:c7 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::309b:98ff:fe19:edc7/64 scope link
       valid_lft forever preferred_lft forever
[root@INIT ~]# brctl show
bridge name     bridge id               STP enabled     interfaces
docker0         8000.0242ef69ffb0       no              veth12a4b18
```
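
Comparing two `ip a` listings by eye works, but the kernel also exposes each process's network namespace as a symlink under /proc, so the sharing can be checked directly. The following is a minimal sketch assuming a Linux /proc layout. The function name `same_netns` and the `PROC_ROOT` override are hypothetical names introduced here for illustration; they are not part of Docker.

```shell
# Compare the network namespaces of two processes by reading the
# /proc/<pid>/ns/net symlink, whose target looks like "net:[4026531992]".
# PROC_ROOT is a hypothetical override so the function can be pointed
# at another directory tree when experimenting; it defaults to /proc.
same_netns() {
    proc_root=${PROC_ROOT:-/proc}
    ns_a=$(readlink "$proc_root/$1/ns/net") || return 2
    ns_b=$(readlink "$proc_root/$2/ns/net") || return 2
    [ -n "$ns_a" ] && [ "$ns_a" = "$ns_b" ]
}

# Example (as root on the Docker host; "test" is the host-mode container):
#   pid=$(docker inspect -f '{{.State.Pid}}' test)
#   same_netns 1 "$pid" && echo "container shares the host network namespace"
```

For a container started in any other mode, the two links should differ and the function returns non-zero.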

Start httpd inside the container, without any port mapping:

```shell
[root@INIT /]# yum install -y httpd
[root@INIT /]# echo "hello docker" > /var/www/html/index.html
[root@INIT /]# httpd -k start
[root@INIT /]# ss -ntlp
State      Recv-Q Send-Q   Local Address:Port     Peer Address:Port
Cannot open netlink socket: Permission denied
LISTEN     0      0                    *:22                  *:*
LISTEN     0      0            127.0.0.1:25                  *:*
LISTEN     0      0                   :::80                 :::*      users:(("httpd",pid=105,fd=4))
LISTEN     0      0                   :::22                 :::*
LISTEN     0      0                  ::1:25                 :::*
```

Access the container's httpd through the host's IP address:

```shell
~]# curl 10.211.55.6
hello docker
```

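container mode

This mode makes a new container share the network namespace of a container that already exists. The new container gets no interfaces of its own; what it sees is the network configuration of the container it shares with. As in host mode, everything other than networking stays isolated between the two containers. Applications in the two containers can talk to each other over the lo interface.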

```shell
[root@INIT docker]# docker run -it --name test --rm --net=container:centos7 centos /bin/bash
[root@88cfec2bb879 /]#
[root@88cfec2bb879 /]# ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.17.0.2  netmask 255.255.0.0  broadcast 0.0.0.0
        inet6 fe80::42:acff:fe11:2  prefixlen 64  scopeid 0x20<link>
        ether 02:42:ac:11:00:02  txqueuelen 0  (Ethernet)
        RX packets 10458  bytes 15276631 (14.5 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 5271  bytes 288229 (281.4 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        inet 127.0.0.1  netmask 255.0.0.0
        inet6 ::1  prefixlen 128  scopeid 0x10<host>
        loop  txqueuelen 0  (Local Loopback)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
```

Check the interface information in the container that is being shared. The addresses are the same:

```shell
[root@INIT ~]# docker exec centos7 ifconfig
eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 172.17.0.2  netmask 255.255.0.0  broadcast 0.0.0.0
        inet6 fe80::42:acff:fe11:2  prefixlen 64  scopeid 0x20<link>
        ether 02:42:ac:11:00:02  txqueuelen 0  (Ethernet)
        RX packets 20900  bytes 30552079 (29.1 MiB)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 10581  bytes 578404 (564.8 KiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
        inet 127.0.0.1  netmask 255.0.0.0
        inet6 ::1  prefixlen 128  scopeid 0x10<host>
        loop  txqueuelen 0  (Local Loopback)
        RX packets 0  bytes 0 (0.0 B)
        RX errors 0  dropped 0  overruns 0  frame 0
        TX packets 0  bytes 0 (0.0 B)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
```

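none mode

In this mode the container has its own network namespace but no interface other than lo. The container therefore has no network access. This can be useful for testing, among other things.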

```shell
[root@INIT docker]# docker run -it --name test --rm --net=none centos /bin/bash
[root@8f3fc6964940 /]#
[root@8f3fc6964940 /]# yum install -y net-tools
Loaded plugins: fastestmirror, ovl
Could not retrieve mirrorlist http://mirrorlist.centos.org/?release=7&arch=x86_64&repo=os&infra=container error was
14: curl#6 - "Could not resolve host: mirrorlist.centos.org; Unknown error"


 One of the configured repositories failed (Unknown),
 and yum doesn't have enough cached data to continue. At this point the only
 safe thing yum can do is fail. There are a few ways to work "fix" this:

     1. Contact the upstream for the repository and get them to fix the problem.
....
[root@8f3fc6964940 /]# ping 127.0.0.1
PING 127.0.0.1 (127.0.0.1) 56(84) bytes of data.
64 bytes from 127.0.0.1: icmp_seq=1 ttl=64 time=0.035 ms
64 bytes from 127.0.0.1: icmp_seq=2 ttl=64 time=0.052 ms
64 bytes from 127.0.0.1: icmp_seq=3 ttl=64 time=0.058 ms
^C
--- 127.0.0.1 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 1999ms
rtt min/avg/max/mdev = 0.035/0.048/0.058/0.011 ms
[root@8f3fc6964940 /]# ping 8.8.8.8
connect: Network is unreachable
```

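Since the container has no network, net-tools cannot be installed, so the interface information cannot be shown with ifconfig here.

bridge mode

Anyone familiar with virtual networking will recognize this mode: it builds a NAT network. Docker gives each container its own network namespace, assigns it an IP address, and connects the containers on a host to a virtual bridge. When the Docker server starts, it creates a virtual bridge named docker0 on the host, and containers started on that host attach to it. In this mode, traffic to and from the outside network depends on iptables rules: SNAT for outbound connections from containers and DNAT for inbound connections to published ports.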
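
To make the NAT step concrete, the sketch below prints iptables rules of roughly the shape Docker installs for the default bridge: a MASQUERADE (SNAT) rule for traffic leaving the bridge subnet, and a DNAT rule for one published TCP port. The function names, the published port 8080, and the container address 172.17.0.2:80 are illustrative assumptions. The functions only print the rules and never touch the firewall; the exact rules on a real host depend on the Docker version and can be listed with `iptables -t nat -S`.

```shell
# Print (do not apply) a source-NAT rule for traffic that leaves the
# bridge subnet through any interface other than the bridge itself.
snat_rule() {
    subnet=$1 bridge=$2
    echo "iptables -t nat -A POSTROUTING -s $subnet ! -o $bridge -j MASQUERADE"
}

# Print (do not apply) a destination-NAT rule that forwards a host TCP
# port to a container address. DOCKER is the chain Docker manages itself.
dnat_rule() {
    bridge=$1 host_port=$2 dest=$3
    echo "iptables -t nat -A DOCKER ! -i $bridge -p tcp --dport $host_port -j DNAT --to-destination $dest"
}

snat_rule 172.17.0.0/16 docker0
dnat_rule docker0 8080 172.17.0.2:80
```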

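Some common options:

--net=bridge (the default)

To have containers use a bridge other than the default docker0, pass options to the docker daemon when it is started:

- -b: the bridge to use

- --bip: the IP address of the bridge

- --default-gateway: the default gateway for containers

- --dns: the DNS server

- --fixed-cidr: the address range allocated to containers

- -H, --host=[]: the socket file or IP address that docker listens on

- --insecure-registry=[]: registries to treat as insecure; this may be needed when setting up a private registry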

Configuring a bridge network manually

```shell
# Create a container with the none network (leave it running and use
# another terminal on the host for the remaining commands)
~]# docker run -it --rm --net=none --name base centos /bin/bash

~]# docker inspect -f '{{.State.Pid}}' base
2778
~]# pid=2778
# Expose the container's network namespace to "ip netns"
~]# mkdir -p /var/run/netns
~]# ln -s /proc/$pid/ns/net /var/run/netns/$pid

# Create a veth pair and attach vethA to docker0
~]# ip link add vethA type veth peer name vethB
~]# brctl addif docker0 vethA
~]# ip link set vethA up

# Move the peer end into the container's namespace, then set the address
# and route. The address must be an unused one in docker0's subnet
# (172.17.0.1/16 in the output above) and the gateway is docker0 itself.
~]# ip link set vethB netns $pid
~]# ip netns exec $pid ip link set dev vethB name eth0
~]# ip netns exec $pid ip addr add 172.17.0.10/16 dev eth0
~]# ip netns exec $pid ip link set eth0 up
~]# ip netns exec $pid ip route add default via 172.17.0.1
```
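
The address given to eth0 has to fall inside the bridge's subnet, and the default gateway has to be the bridge's own address (docker0 showed 172.17.0.1/16 in the `ip a` output earlier); otherwise the container cannot reach its gateway. A minimal sketch for checking this before running `ip addr add` follows. The helper names `ip_to_int` and `in_subnet` are hypothetical, and the sketch assumes plain dotted-quad IPv4 input without leading zeros.

```shell
# Convert a dotted-quad IPv4 address to an integer.
# The body runs in a subshell so the IFS change does not leak out.
ip_to_int() (
    IFS=.
    set -- $1          # split on dots into $1..$4
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeed if the address lies inside the CIDR block.
# Usage: in_subnet 172.17.0.10 172.17.0.0/16
in_subnet() (
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")     # part before the slash
    bits=${2#*/}                   # prefix length after the slash
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( ip & mask )) -eq $(( net & mask )) ]
)

# Example:
#   in_subnet 172.17.0.10 172.17.0.1/16 && echo "address is on the docker0 subnet"
```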

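Switching to a different bridge

Make sure the docker service is stopped:

```shell
~]# systemctl stop docker
~]# systemctl status docker
```

Create your own bridge:

```shell
~]# ip link set dev docker0 down
~]# brctl delbr docker0
~]# brctl addbr mybr0
~]# ip addr add 192.168.1.1/24 dev mybr0
~]# ip link set mybr0 up
~]# ip addr show mybr0
~]# echo "DOCKER_NETWORK_OPTIONS='-b=mybr0'" > /etc/sysconfig/docker-network
```

Once the docker service is started again, containers attach to mybr0, so the IP address range of all containers changes accordingly.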
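
The setup above stores the bridge option in /etc/sysconfig/docker-network, which is specific to some distribution packages. On Docker versions that read /etc/docker/daemon.json (an assumption about the installed version), the same choice can be kept there using the `bridge` key. The sketch below writes such a file; `write_daemon_json` is a hypothetical helper, and the DNS server 8.8.8.8 is only an illustrative value.

```shell
# Write a minimal daemon configuration that selects a custom bridge
# and a DNS server for containers.
# Usage: write_daemon_json PATH BRIDGE DNS
write_daemon_json() {
    path=$1 bridge=$2 dns=$3
    cat > "$path" <<EOF
{
  "bridge": "$bridge",
  "dns": ["$dns"]
}
EOF
}

# Example (as root, with the docker service stopped):
#   write_daemon_json /etc/docker/daemon.json mybr0 8.8.8.8
```

Set each option in one place only: the daemon refuses to start when the same option is given both as a command-line flag and in the configuration file.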

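Linking containers

Besides publishing ports to the outside network, linking is another way to interact with an application in a container. The link system creates a tunnel between the source container and the recipient container.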

```shell
~]# docker run -itd --name centos centos /bin/bash
~]# docker run -itd --name db --link centos:db centos /bin/bash
```

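Docker creates a secure tunnel between the two linked containers without mapping their ports to the host. Here the container named db is the recipient, and it reaches the source container centos under the alias db. Neither container was started with the -p or -P flag, so no port (a database port, for example) is exposed to the external network.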
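
On the default bridge, `--link` makes the source container reachable by its alias from inside the recipient, through an entry that Docker adds to the recipient's /etc/hosts. The sketch below looks up a name in a hosts-format file. `link_ip` is a hypothetical helper written for this illustration, not a Docker command.

```shell
# Print the first IP address whose hosts-file entry lists the given name.
# Usage: link_ip NAME [HOSTS_FILE]   (HOSTS_FILE defaults to /etc/hosts)
link_ip() {
    awk -v name="$1" '
        /^[[:space:]]*#/ { next }                 # skip comment lines
        { for (i = 2; i <= NF; i++)               # fields after the IP are names
              if ($i == name) { print $1; exit } }
    ' "${2:-/etc/hosts}"
}

# Example: inspect the entries Docker wrote into the recipient container
#   docker exec db cat /etc/hosts
# and, with the function defined inside that container:
#   link_ip db
```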

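Creating a point-to-point link

By default, all of Docker's virtual networks get their subnet from docker0. Sometimes two containers need to talk to each other directly instead of going through the bridge. The solution is to use the kernel's network namespaces to create a virtual cable (a veth pair) yourself and plug one end into each container.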

```shell
~]# docker run -itd --name test1 --net=none centos /bin/bash
~]# docker run -itd --name test2 --net=none centos /bin/bash
```

Find the process IDs and create the tracking files for the network namespaces:

```shell
~]# docker inspect -f '{{.State.Pid}}' test1
31447
~]# docker inspect -f '{{.State.Pid}}' test2
31530
~]# mkdir -p /var/run/netns
~]# ln -s /proc/31447/ns/net /var/run/netns/31447
~]# ln -s /proc/31530/ns/net /var/run/netns/31530
```
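
The symlinks are needed because `ip netns` only sees namespaces that have an entry under /var/run/netns. The two steps can be wrapped in a small helper, sketched below. `expose_netns`, `unexpose_netns`, and the optional directory argument are hypothetical; the directory argument exists only so the helpers can be tried without touching /var/run/netns.

```shell
# Make the network namespace of process PID visible to "ip netns"
# under the name PID.
# Usage: expose_netns PID [DIR]   (DIR defaults to /var/run/netns)
expose_netns() {
    pid=$1 dir=${2:-/var/run/netns}
    mkdir -p "$dir" || return 1
    # -f replaces a stale link left by an earlier container,
    # -n keeps ln from following an existing link.
    ln -sfn "/proc/$pid/ns/net" "$dir/$pid"
}

# Remove the link again once the container is gone.
# Usage: unexpose_netns PID [DIR]
unexpose_netns() {
    rm -f "${2:-/var/run/netns}/$1"
}

# Example (as root):
#   expose_netns "$(docker inspect -f '{{.State.Pid}}' test1)"
```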

Create a pair of peer interfaces, move one end into each container, and configure the routes:

```shell
~]# ip link add name vethA type veth peer name vethB
~]# ip a
...
85: vethB@vethA: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN qlen 1000
    link/ether f6:ae:1e:cb:f5:43 brd ff:ff:ff:ff:ff:ff
86: vethA@vethB: <BROADCAST,MULTICAST,M-DOWN> mtu 1500 qdisc noop state DOWN qlen 1000
    link/ether ba:fd:ea:8f:08:9c brd ff:ff:ff:ff:ff:ff
~]# ip link set vethA netns 31530
~]# ip netns exec 31530 ip addr add 10.0.0.1/32 dev vethA
~]# ip netns exec 31530 ip link set vethA up
~]# ip netns exec 31530 ip route add 10.0.0.2/32 dev vethA

~]# ip link set vethB netns 31447
~]# ip netns exec 31447 ip addr add 10.0.0.2/32 dev vethB
~]# ip netns exec 31447 ip link set vethB up
~]# ip netns exec 31447 ip route add 10.0.0.1/32 dev vethB

~]# docker exec test1 ping 10.0.0.1
PING 10.0.0.1 (10.0.0.1) 56(84) bytes of data.
64 bytes from 10.0.0.1: icmp_seq=1 ttl=64 time=0.057 ms
64 bytes from 10.0.0.1: icmp_seq=2 ttl=64 time=0.061 ms
...

~]# docker exec test2 ping 10.0.0.2
PING 10.0.0.2 (10.0.0.2) 56(84) bytes of data.
64 bytes from 10.0.0.2: icmp_seq=1 ttl=64 time=0.052 ms
64 bytes from 10.0.0.2: icmp_seq=2 ttl=64 time=0.046 ms
...
```