keepalived+lvs

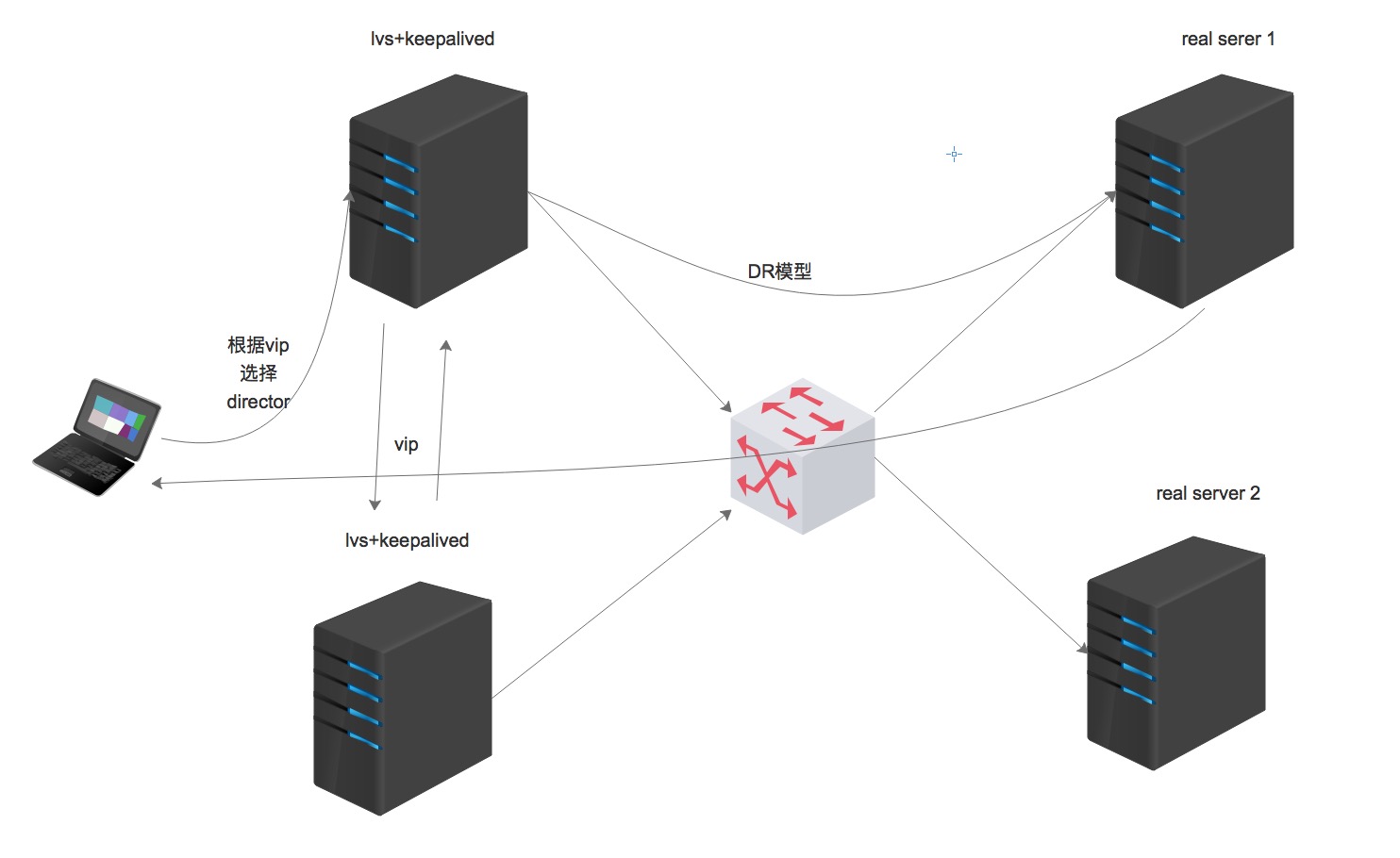

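keepalived was originally built as a companion to LVS. It adds health checking of the back-end servers, adds and removes LVS real servers dynamically as they fail or recover, and makes the LVS director itself highly available, so the two fit together very well. In production, however, LVS itself sees comparatively little use, while keepalived is used widely to make HAProxy, nginx and similar services highly available. That makes it well worth some hands-on practice with keepalived.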

Lab environment

Real servers: 10.211.55.45, 10.211.55.46

LVS + keepalived directors: 10.211.55.43, 10.211.55.45

VIP: 10.211.55.24

Real server configuration

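The configuration is essentially the same on every real server, so it is wrapped in a script that takes the VIP as its first argument and `start` or `stop` as its second: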

```bash
#!/bin/bash
# Usage: $0 VIP {start|stop}
vip=$1

case $2 in
start)
    # Answer ARP only for addresses configured on the receiving interface,
    # so this host never replies to ARP requests for the VIP held on lo
    echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
    echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
    # Use the best local address (never the VIP) as the ARP source address
    echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
    echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

    # Bind the VIP to loopback as a /32 so the host accepts packets for it
    ip addr add "$vip"/32 dev lo label lo:0
    route add -host "$vip" dev lo:0
    ;;
stop)
    echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore
    echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore
    echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce
    echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce

    # Remove the host route first, then the address it points at
    route del -host "$vip" dev lo:0
    ip addr del "$vip"/32 dev lo
    ;;
*)
    echo "Usage: $0 VIP {start|stop}" >&2
    exit 1
    ;;
esac
```
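After running the script with `start`, it can be worth confirming that the ARP settings actually took effect, because a real server that answers ARP for the VIP can pull traffic away from the director. The following is a minimal sketch that is not part of the original setup: `check_dr_arp` is a hypothetical helper, and its optional ROOT argument exists only so it can be pointed at a copy of the `/proc/sys/net/ipv4/conf` tree for testing.

```sh
#!/bin/sh
# check_dr_arp [ROOT]: hypothetical helper that checks the ARP sysctls the
# real-server script sets. ROOT defaults to the live /proc tree; pass another
# directory with the same layout to run it without root privileges.
check_dr_arp() {
    root=${1:-/proc/sys/net/ipv4/conf}
    rc=0
    for ifc in all lo; do
        # A missing file leaves the value empty, which is reported below
        ignore=$(cat "$root/$ifc/arp_ignore" 2>/dev/null) || true
        announce=$(cat "$root/$ifc/arp_announce" 2>/dev/null) || true
        if [ "$ignore" != 1 ] || [ "$announce" != 2 ]; then
            echo "$ifc: arp_ignore=$ignore arp_announce=$announce (want 1 and 2)"
            rc=1
        fi
    done
    return "$rc"
}
```

On a real server, `check_dr_arp && echo ok` prints `ok` only when both `all` and `lo` have `arp_ignore=1` and `arp_announce=2`; otherwise it prints the offending values.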

Set up a test site:

```
~]# yum install -y httpd
~]# echo "<h1>real server 1</h1>" > /var/www/html/index.html
~]# systemctl start httpd
```

Both real servers need the same setup (the second one serves `<h1>real server 2</h1>`), so it is not repeated here.

Configure keepalived

Master configuration

```
~]# yum install -y keepalived
~]# vim /etc/keepalived/keepalived.conf
```

Contents of `/etc/keepalived/keepalived.conf` on the MASTER:

```
global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id node1
    vrrp_mcast_group4 224.0.100.18
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 100
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.211.55.24/24 dev eth0 label eth0:0
    }
}

virtual_server 10.211.55.24 80 {
    lb_algo wrr                  # scheduling algorithm (weighted round robin)
    delay_loop 2                 # health-check polling interval, in seconds
    lb_kind DR                   # forwarding type (direct routing)
    persistence_timeout 0        # persistent-connection timeout (0 = off)
    protocol TCP                 # protocol
    sorry_server 127.0.0.1 80    # used when no real server is available
    real_server 10.211.55.45 80 {    # real server
        weight 1                     # weight
        HTTP_GET {                   # health-check method
            url {                    # URL to check
                path /               # request path
                status_code 200      # expected HTTP status code
            }
            connect_timeout 30       # connection timeout, in seconds
            nb_get_retry 3           # number of retries
            delay_before_retry 3     # delay between retries, in seconds
        }
    }

    real_server 10.211.55.46 80 {
        weight 2
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
```

Backup configuration

```
~]# yum install -y keepalived
~]# vim /etc/keepalived/keepalived.conf
```

Contents of `/etc/keepalived/keepalived.conf` on the BACKUP:

```
global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id node2
    vrrp_mcast_group4 224.0.100.18
}

vrrp_instance VI_1 {
    state BACKUP
    interface eth0
    virtual_router_id 100
    priority 95
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        10.211.55.24/24 dev eth0 label eth0:0
    }
}

virtual_server 10.211.55.24 80 {
    lb_algo wrr                  # scheduling algorithm (weighted round robin)
    delay_loop 2                 # health-check polling interval, in seconds
    lb_kind DR                   # forwarding type (direct routing)
    persistence_timeout 0        # persistent-connection timeout (0 = off)
    protocol TCP                 # protocol
    sorry_server 127.0.0.1 80    # used when no real server is available
    real_server 10.211.55.45 80 {    # real server
        weight 1                     # weight
        HTTP_GET {                   # health-check method
            url {                    # URL to check
                path /               # request path
                status_code 200      # expected HTTP status code
            }
            connect_timeout 30       # connection timeout, in seconds
            nb_get_retry 3           # number of retries
            delay_before_retry 3     # delay between retries, in seconds
        }
    }

    real_server 10.211.55.46 80 {
        weight 2
        HTTP_GET {
            url {
                path /
                status_code 200
            }
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
}
```
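The BACKUP file differs from the MASTER's only in `router_id` (node2), `state` (BACKUP) and `priority` (95), so it can also be derived mechanically instead of being maintained by hand. A minimal sketch, assuming the MASTER file keeps one setting per line as above; `make_backup_conf` is a hypothetical helper, not a keepalived tool:

```sh
#!/bin/sh
# make_backup_conf ROUTER_ID PRIORITY < master.conf > backup.conf
# Hypothetical helper: rewrites the three settings that differ between the
# MASTER and BACKUP files and copies every other line unchanged.
# ROUTER_ID must not contain "/" or "&" (both are special in sed replacements).
make_backup_conf() {
    sed -e "s/^\([[:space:]]*router_id\)[[:space:]].*/\1 $1/" \
        -e "s/^\([[:space:]]*state\)[[:space:]][[:space:]]*MASTER/\1 BACKUP/" \
        -e "s/^\([[:space:]]*priority\)[[:space:]].*/\1 $2/"
}
```

For example, `make_backup_conf node2 95 < master.conf > /etc/keepalived/keepalived.conf`, where `master.conf` is a copy of the MASTER's file (a hypothetical name).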

Testing

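Start keepalived on the BACKUP node first. With no MASTER advertising yet, the BACKUP transitions to MASTER state, takes the VIP and takes over LVS scheduling: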

```
~]# systemctl start keepalived
~]# ipvsadm -L -n
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.211.55.24:80 wrr
  -> 10.211.55.45:80              Route   1      0          0
  -> 10.211.55.46:80              Route   2      0          0
~]# tail -f /var/log/messages
Apr 26 15:24:32 localhost Keepalived[9630]: Starting Keepalived v1.2.13 (11/05,2016)
Apr 26 15:24:32 localhost Keepalived[9631]: Starting Healthcheck child process, pid=9632
Apr 26 15:24:32 localhost Keepalived[9631]: Starting VRRP child process, pid=9633
Apr 26 15:24:32 localhost Keepalived_vrrp[9633]: Netlink reflector reports IP 10.211.55.43 added
Apr 26 15:24:32 localhost Keepalived_vrrp[9633]: Registering Kernel netlink reflector
Apr 26 15:24:32 localhost Keepalived_vrrp[9633]: Registering Kernel netlink command channel
Apr 26 15:24:32 localhost Keepalived_vrrp[9633]: Registering gratuitous ARP shared channel
Apr 26 15:24:32 localhost Keepalived_vrrp[9633]: Opening file '/etc/keepalived/keepalived.conf'.
Apr 26 15:24:32 localhost Keepalived_vrrp[9633]: Configuration is using : 63190 Bytes
Apr 26 15:24:32 localhost Keepalived_vrrp[9633]: Using LinkWatch kernel netlink reflector...
Apr 26 15:24:32 localhost Keepalived_vrrp[9633]: VRRP_Instance(VI_1) Entering BACKUP STATE
Apr 26 15:24:32 localhost Keepalived_vrrp[9633]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]
Apr 26 15:24:32 localhost systemd: Started LVS and VRRP High Availability Monitor.
Apr 26 15:24:32 localhost Keepalived_healthcheckers[9632]: Netlink reflector reports IP 10.211.55.43 added
Apr 26 15:24:32 localhost Keepalived_healthcheckers[9632]: Registering Kernel netlink reflector
Apr 26 15:24:32 localhost Keepalived_healthcheckers[9632]: Registering Kernel netlink command channel
Apr 26 15:24:32 localhost Keepalived_healthcheckers[9632]: Opening file '/etc/keepalived/keepalived.conf'.
Apr 26 15:24:32 localhost Keepalived_healthcheckers[9632]: Configuration is using : 17225 Bytes
Apr 26 15:24:32 localhost Keepalived_healthcheckers[9632]: Using LinkWatch kernel netlink reflector...
Apr 26 15:24:32 localhost Keepalived_healthcheckers[9632]: Activating healthchecker for service [10.211.55.45]:80
Apr 26 15:24:32 localhost Keepalived_healthcheckers[9632]: Activating healthchecker for service [10.211.55.46]:80
Apr 26 15:24:35 localhost Keepalived_vrrp[9633]: VRRP_Instance(VI_1) Transition to MASTER STATE
Apr 26 15:24:36 localhost Keepalived_vrrp[9633]: VRRP_Instance(VI_1) Entering MASTER STATE
Apr 26 15:24:36 localhost Keepalived_vrrp[9633]: VRRP_Instance(VI_1) setting protocol VIPs.
Apr 26 15:24:36 localhost Keepalived_vrrp[9633]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 10.211.55.24
Apr 26 15:24:36 localhost Keepalived_healthcheckers[9632]: Netlink reflector reports IP 10.211.55.24 added
Apr 26 15:24:41 localhost Keepalived_vrrp[9633]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 10.211.55.24
```

Test access to the VIP:

```
~]# curl 10.211.55.24
<h1>real server 2</h1>
~]# curl 10.211.55.24
<h1>real server 2</h1>
~]# curl 10.211.55.24
<h1>real server 1</h1>
```
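These `curl` results follow from `lb_algo wrr` with weight 1 on 10.211.55.45 and weight 2 on 10.211.55.46: in steady state, .46 receives two requests for every one that .45 receives. As an illustration only, here is a sketch of the interleaved weighted round-robin procedure described in the LVS documentation; `wrr_sequence` is hypothetical, and where the kernel's sequence starts depends on the requests already served, so the order you observe may be a rotation of this one.

```sh
#!/bin/sh
# wrr_sequence N W1 W2 ... : print the 1-based index of the server chosen for
# each of N requests under interleaved weighted round robin (illustrative).
gcd() {
    a=$1; b=$2
    while [ "$b" -ne 0 ]; do
        t=$((a % b)); a=$b; b=$t
    done
    echo "$a"
}

wrr_sequence() {
    n=$1; shift
    count=$#
    mw=0; g=0
    for w in "$@"; do                   # maximum weight and gcd of all weights
        if [ "$w" -gt "$mw" ]; then mw=$w; fi
        g=$(gcd "$g" "$w")
    done
    if [ "$mw" -eq 0 ]; then return 1; fi   # all weights 0: nothing to schedule
    i=0; cw=0; picked=0; out=""
    while [ "$picked" -lt "$n" ]; do
        i=$((i % count + 1))            # next server, wrapping around
        if [ "$i" -eq 1 ]; then         # new pass: lower the weight threshold
            cw=$((cw - g))
            if [ "$cw" -le 0 ]; then cw=$mw; fi
        fi
        eval "w=\${$i}"                 # weight of server i
        if [ "$w" -ge "$cw" ]; then     # heavy enough for this pass: pick it
            out="$out $i"
            picked=$((picked + 1))
        fi
    done
    echo $out                           # unquoted on purpose: drops the leading space
}
```

For example, `wrr_sequence 6 1 2` prints `2 1 2 2 1 2`, where 1 stands for 10.211.55.45 and 2 for 10.211.55.46; the three `curl` results above match three consecutive picks from that cycle.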

Now start keepalived on the MASTER node. Its priority (100) is higher than the BACKUP's (95), so it immediately takes over the BACKUP's role:

```
~]# systemctl start keepalived
~]# ipvsadm -L -n
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.211.55.24:80 wrr
  -> 10.211.55.45:80              Route   1      0          0
  -> 10.211.55.46:80              Route   2      0          0
```

Now look at the log on the BACKUP node:

```
~]# tail -f /var/log/messages
Apr 26 15:32:31 localhost Keepalived_vrrp[9633]: VRRP_Instance(VI_1) Received higher prio advert
Apr 26 15:32:31 localhost Keepalived_vrrp[9633]: VRRP_Instance(VI_1) Entering BACKUP STATE
Apr 26 15:32:31 localhost Keepalived_vrrp[9633]: VRRP_Instance(VI_1) removing protocol VIPs.
Apr 26 15:32:31 localhost Keepalived_healthcheckers[9632]: Netlink reflector reports IP 10.211.55.24 removed
```
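The takeover follows the VRRP election rule (RFC 3768): the router advertising the highest priority becomes MASTER, and when priorities are equal, the one with the higher primary IP address wins. keepalived preempts by default, which is why the MASTER reclaimed the VIP as soon as it came up. A minimal sketch of just the election rule; `vrrp_elect` and its input format (name, priority and primary IP on each line) are hypothetical:

```sh
#!/bin/sh
# vrrp_elect: read "NAME PRIORITY PRIMARY_IP" lines on stdin and print the
# NAME that the VRRP election rule would make MASTER (illustrative only).
vrrp_elect() {
    awk '{
        split($3, o, ".")
        ip = ((o[1] * 256 + o[2]) * 256 + o[3]) * 256 + o[4]   # IPv4 as a number
        p = $2 + 0
        # Higher priority wins; on a tie, the higher primary IP wins
        if (NR == 1 || p > bestp || (p == bestp && ip > bestip)) {
            best = $1; bestp = p; bestip = ip
        }
    }
    END { if (NR > 0) print best }'
}
```

Fed the two nodes of this setup (priorities 100 and 95), it prints `node1` whatever their addresses are, because the tie-break on IP only applies when priorities are equal.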

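In other words, keepalived builds the LVS scheduling rules from its own configuration; no `ipvsadm` rules were added by hand.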

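Next, stop one of the real servers. Because keepalived health-checks the real servers, the failed node is removed from the LVS rules right away, and it is added back to the cluster once it recovers: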

On real server 10.211.55.45:

```
~]# systemctl stop httpd
```

Then, on the director:

```
~]# tail -f /var/log/messages
Apr 25 21:21:01 localhost Keepalived_healthcheckers[24360]: Error connecting server [10.211.55.45]:80.
Apr 25 21:21:01 localhost Keepalived_healthcheckers[24360]: Removing service [10.211.55.45]:80 from VS [10.211.55.24]:80
Apr 25 21:21:01 localhost Keepalived_healthcheckers[24360]: Remote SMTP server [127.0.0.1]:25 connected.
Apr 25 21:21:01 localhost Keepalived_healthcheckers[24360]: SMTP alert successfully sent.
~]# ipvsadm -L -n
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.211.55.24:80 wrr
  -> 10.211.55.46:80              Route   2      0          0
~]# mail
Heirloom Mail version 12.5 7/5/10.  Type ? for help.
"/var/spool/mail/root": 11 messages 11 new
>N  1 keepalived@localhost  Tue Apr 25 19:45  17/658   "[node1] Realserver [10.211.55.46]:80 - DOWN"
```
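To watch the pool change while httpd is stopped and started on a real server, the `ipvsadm -L -n` output can be filtered down to the real servers of a single virtual service. A minimal sketch, assuming the plain-text layout shown above; `rs_list` is a hypothetical helper:

```sh
#!/bin/sh
# rs_list VIP:PORT : read `ipvsadm -L -n` output on stdin and print the real
# servers listed under that virtual service, one per line (hypothetical helper).
rs_list() {
    awk -v vs="$1" '
        # A protocol line starts a new virtual-service block
        $1 == "TCP" || $1 == "UDP" || $1 == "SCTP" || $1 == "FWM" { inside = ($2 == vs); next }
        # "->" lines inside the wanted block are its real servers
        inside && $1 == "->" { print $2 }
    '
}
```

For example, `ipvsadm -L -n | rs_list 10.211.55.24:80` prints only `10.211.55.46:80` after httpd is stopped on 10.211.55.45. The column-header line is skipped because it appears before any protocol line.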

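Finally, stop httpd on all the real servers to confirm that the maintenance page is served once every real server is down. The sorry server is the web server at 127.0.0.1:80 on the director itself (`sorry_server 127.0.0.1 80`), so each director needs its own web server on port 80 for this to work: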

```
~]# tail -f /var/log/messages
Apr 25 21:24:12 localhost Keepalived_healthcheckers[24360]: Error connecting server [10.211.55.46]:80.
Apr 25 21:24:12 localhost Keepalived_healthcheckers[24360]: Removing service [10.211.55.46]:80 from VS [10.211.55.24]:80
Apr 25 21:24:12 localhost Keepalived_healthcheckers[24360]: Lost quorum 1-0=1 > 0 for VS [10.211.55.24]:80
Apr 25 21:24:12 localhost Keepalived_healthcheckers[24360]: Adding sorry server [127.0.0.1]:80 to VS [10.211.55.24]:80
Apr 25 21:24:12 localhost Keepalived_healthcheckers[24360]: Removing alive servers from the pool for VS [10.211.55.24]:80
Apr 25 21:24:12 localhost Keepalived_healthcheckers[24360]: Remote SMTP server [127.0.0.1]:25 connected.
Apr 25 21:24:13 localhost Keepalived_healthcheckers[24360]: SMTP alert successfully sent.

~]# ipvsadm -L -n
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.211.55.24:80 wrr
  -> 127.0.0.1:80                 Route   1      0          0

~]# curl 10.211.55.24
<h1>sorry server 1</h1>
```
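The `Lost quorum 1-0=1 > 0` line compares quorum minus hysteresis with the total weight of the real servers still alive: with the defaults (quorum 1, hysteresis 0) and both servers down, 1 - 0 = 1 exceeds the remaining weight 0, so the sorry server is swapped in. A sketch of that comparison, assuming those defaults and assuming the matching gain condition is "alive weight at least quorum plus hysteresis"; `quorum_state` is hypothetical and only mirrors the arithmetic in the log line:

```sh
#!/bin/sh
# quorum_state ALIVE_WEIGHT [QUORUM] [HYSTERESIS] : classify the total weight
# of the live real servers (illustrative; defaults quorum 1, hysteresis 0).
quorum_state() {
    alive=$1; q=${2:-1}; h=${3:-0}
    if [ "$alive" -eq 0 ] || [ "$alive" -lt $((q - h)) ]; then
        echo lost        # sorry_server takes over
    elif [ "$alive" -ge $((q + h)) ]; then
        echo up          # real servers carry the traffic
    else
        echo unchanged   # inside the hysteresis band: previous state is kept
    fi
}
```

In this setup, stopping one server still leaves weight 1 or 2 alive, which meets the default quorum of 1, so only stopping both real servers brings in the sorry server.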

Summary

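Of all high-availability cluster setups, keepalived is the easiest to implement and the most widely used. Its ability to fail over resources is limited, though: in keepalived+LVS, all it really moves between nodes is the VIP, and LVS becomes highly available only because keepalived complements LVS so well (building the IPVS rules and health-checking the real servers). Even so, keepalived+LVS is already good enough for enterprise use.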